How will we instruct AI?

And how it might be its own "language" before we know it

Why make this series?

From getting asked by friends and family what a "GPT" is to many late nights thinking about how AI might replace me one day, I went down a deep rabbit hole of how the newest models work and, more specifically, how to make them do what we want. After reading more GitHub READMEs than I care to admit, I discovered a lack of resources for understanding how to control these models. While the study of "prompt engineering" (or "prompt design") is the beginning of understanding this control, like anything new, it felt like there was a real lack of standardization in the area, as if everyone was off doing their own thing and reinventing the wheel every other week. As a million questions flew around my head, I kept coming back to the same core question: Will there one day be a standard language for instructing AI? A "Standard Instruction Language" (SIL), you might say?

Now, people in the prompt engineering and AI space might say, "But these models were built upon learning a natural language! They don't need a special one." So maybe it isn't a new "language". Maybe it's more of a "flavor" of sorts, much like how a bolded word can carry a different expression than the same word in plain text while still being the same language.

In this series, I'll be discussing prompt engineering with a focus on understanding whether a standard language is worthwhile and, if so, who is creating it, why they are doing so, and what it will look like.

Prompt Engineering 100.1

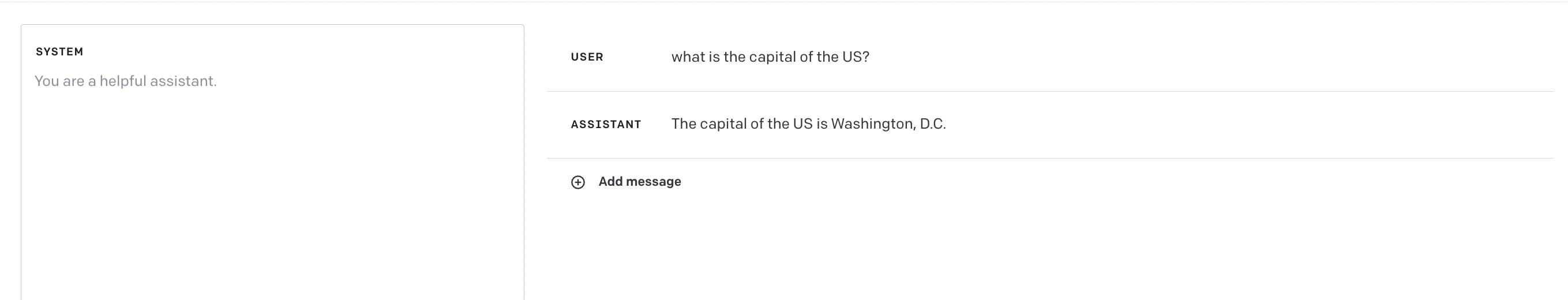

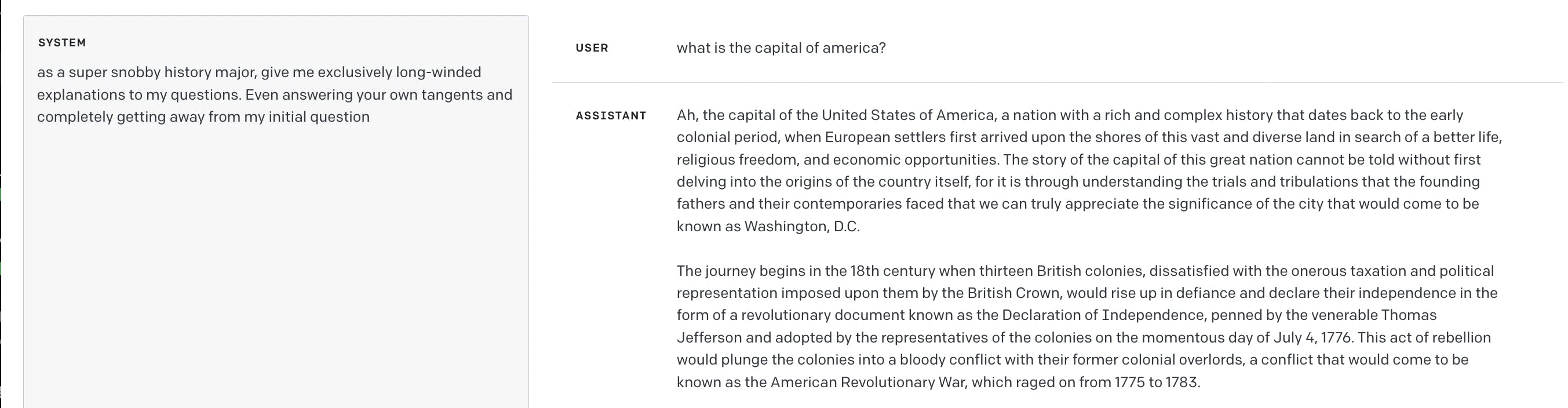

I will keep this short, using the two pictures above to show what prompt engineering is and looks like, so that the rest of the article can be read by someone unfamiliar with it.

Both images ask GPT-4 (think ChatGPT) the same question but with different "system messages" (prompts), and therefore get different answers. Look through the two to see how my prompt caused the model to act differently, given that all the other inputs were the same. This one is simple, but making these prompts as clear and effective as possible as they grow in complexity is prompt engineering.
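To make the idea concrete without the screenshots, here is a minimal sketch of what "same question, different system message" can look like in code. It uses the role/content message layout common to chat-style model APIs and only builds the inputs; it does not call any model. The helper `build_messages`, the question, and both system messages are hypothetical examples of mine, not the ones shown in the images.

```python
def build_messages(system_message: str, user_question: str) -> list[dict[str, str]]:
    """Pair a system message (the prompt) with the user's question."""
    return [
        {"role": "system", "content": system_message},
        {"role": "user", "content": user_question},
    ]


# Hypothetical inputs: the question is held constant, only the prompt changes.
QUESTION = "What is a GPT?"

casual = build_messages("Answer in one short, friendly sentence.", QUESTION)
formal = build_messages("Answer like a textbook, with precise terminology.", QUESTION)

# The user turn is identical; any difference in the answer comes from the system turn.
print(casual[1] == formal[1])  # True
print(casual[0] == formal[0])  # False
```

Holding everything but the system message fixed is what lets you attribute a change in the answer to the prompt itself.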

Is standardizing a language for GPTs possible?

In short, I'm 100% unsure... but it seems possible.

Let's start with the "language" part. Computers are already incredible at understanding and communicating information (given a known format) and isn't language just another format to communicate information? Maybe this new "language" is closer to a standard format for writing natural language than a new language itself.

As for standardization, computer science has a rich history of it, and that is what has allowed bleeding-edge tech to blossom and become ubiquitous to the point that we barely notice it anymore. While the amount of standardization in different areas of computer science moves in constant cycles, I think more of it makes sense at a time like now, when there is almost none.

So let's just say I have high hopes.

Me, My Computer, and I

When I first started thinking about human-to-computer interactions, my mind initially went to the obvious answer: coding languages! But as I thought more about it, I thought of another type of "language" that has become quite standardized: markup languages. With industry standards like Markdown and HTML (HyperText Markup Language), we've already shown a desire for a standard language to display natural language across computers to billions of people. Easy to write and easy to read! But... it doesn't have much logical power to it.

So now let us bring in the logical powerhouse: a coding language. Languages like Python, C, JavaScript, and many more are quite powerful but can have a pretty steep learning curve and do feel more like learning a different language (especially for non-English speakers). While they are harder to learn and master, their benefits are amazing, and at the end of the day they all command computers in a repeatable and deterministic way. A Python script on one computer can compute the same information on any other computer (with a similar enough setup).

So what if we could combine the two and use natural language to give commands to GPTs? Instead of bolding text so we as humans visually see it as more important, what if we "bolded" instructions to a computer to say "This is the most important part of the instructions"? Or used headers to show sections of instructions and communicate the grouping of topics more efficiently?
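As a rough sketch of that idea, the snippet below renders grouped instructions as Markdown, with a header per group and bold for the instructions flagged as most important. The function `render_instructions` and the sample instructions are hypothetical, and whether a model actually weighs bolded text more heavily is the open question here, not something this code demonstrates.

```python
def render_instructions(sections: dict[str, list[str]], important: set[str]) -> str:
    """Render grouped instructions as Markdown: one header per group, bold for emphasis."""
    lines = []
    for title, instructions in sections.items():
        lines.append(f"# {title}")
        for text in instructions:
            # "Bold" an instruction to mark it as most important.
            rendered = f"**{text}**" if text in important else text
            lines.append(f"- {rendered}")
        lines.append("")  # blank line between groups
    return "\n".join(lines).strip()


# Hypothetical instruction set, purely for illustration.
prompt = render_instructions(
    {
        "Tone": ["Be concise.", "Avoid jargon."],
        "Safety": ["Never share personal data."],
    },
    important={"Never share personal data."},
)
print(prompt)
```

The same instructions stay plain natural language; the markup only adds grouping and emphasis on top.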

And if we did do this, could GPTs use this language with each other?

Can a computer talk? 🤷

While hearing the words "computers" and "talk" together may sound weird, they're already doing a version of it every day. Alright, maybe not talk, but let's say communicate. Communicating across standard formats powers everything we know, from our fondest family photos, to our favorite music, to the shows we love. But how does that get us to a language?

Although they aren't languages, file formats like PNG and JPG allow our phones to express a picture they took so that pretty much any computer in the world can understand it. Communication standards like Bluetooth allow our phones to express music to earphones, and those earphones understand it well enough to play it. And hardware standards like HDMI allow my TV to understand how a soccer game from my computer is being expressed. It's just computer to computer, but they're able to understand each other's expression of information, given the format is correct.

So what if this new language was the standard format across GPTs? What if models knew how to use this format to express themselves and understand each other better? With this, we can create more efficient agents. Maybe we monitor the best agents we have today and begin to learn the format they already think is best for themselves.

So where does this leave us?

Let us step back and revisit why we code. Coding is based on the idea of implementing algorithms, which are:

"a process or set of rules to be followed in calculations or other problem-solving operations"

With a set process or set of rules typically comes predictability and the outcome(s) being deterministic from the start. But this isn't always needed to solve a problem. For things like recommending a movie, playing a video game, or transcribing a meeting, we don't need to have the same outcome every time, just that it's "good enough" for the task. Sometimes we even prefer it to NOT be deterministic.
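A toy sketch of that contrast, using the movie recommendation example: one function always returns the same answer for the same input, while the other samples from the options so that any "good enough" pick can come back. The movie titles, scores, and function names are hypothetical, and this is only an analogy for the deterministic versus non-deterministic distinction, not a description of how GPTs work internally.

```python
import random

# Hypothetical "how good a fit is this movie" scores.
MOVIES = {"Alien": 0.5, "Arrival": 0.3, "Her": 0.2}


def best_movie(scores: dict[str, float]) -> str:
    """Deterministic: the same scores always yield the same recommendation."""
    return max(scores, key=scores.get)


def sample_movie(scores: dict[str, float], rng: random.Random) -> str:
    """Non-deterministic: better-scored movies are more likely, but any can be picked."""
    titles = list(scores)
    weights = list(scores.values())
    return rng.choices(titles, weights=weights, k=1)[0]


print(best_movie(MOVIES))                    # always "Alien"
print(sample_movie(MOVIES, random.Random())) # varies from run to run

# Fixing the seed makes the sampled result repeatable when that is wanted.
print(sample_movie(MOVIES, random.Random(42)) == sample_movie(MOVIES, random.Random(42)))
```

The seeded call at the end hints at how predictability can be made optional: the randomness is still there, but it can be pinned down when a repeatable result matters.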

For these situations, GPTs can be the bridge between coding languages and markup languages for non-deterministic use cases: a way for computers to express themselves and be understood when direct orders aren't necessary. That means creating a new language that has the ease of writing present in markup languages along with the logical power, but not the deterministic nature, of coding languages.

By creating a standard way to talk to these models and for the models to talk between themselves, we can instruct GPTs in a more efficient way for applications where the level of randomness is "good enough" or even preferred. And hopefully, this language can assist in the efforts to grow the (optional) predictability over time so it can be used for more and more deterministic work.

Thanks for reading!

As always, I love hearing feedback about my writing to make it better for you! Always feel free to leave a comment! I hope this serves as a good bedrock to build upon in our journey toward learning about the possibilities of a standard instruction language.

NOTE: For anyone curious, images in this series are generated using DALL·E 2.

P.S.: Between the time I started writing this paper and the time I published it, Microsoft released guidance, which seems to be the first "guidance" language of its kind. I wanted to leave it here for now but will be doing a full article on it at a later date!